Does the Use of Pre-release Material Affect the Validity of the Assessment of Programming Skills?

| ✅ Paper Type: Free Essay | ✅ Subject: Teaching |

| ✅ Wordcount: 3977 words | ✅ Published: 23 Sep 2019 |

Does the use of pre-release material affect the validity of the assessment of programming skills in Cambridge IGCSE™ Computer Science?

Introduction

Programming is an important construct of computer science. In Level 2 qualifications, students are required to develop their programming skills practically. “The content…requires students to develop their ability to design, write, test and refine computer programs…” (Ofqual, 2018, p. 3).

The assessment of programming still poses a challenge for awarding bodies. The UK GCSE includes a non-examined assessment (NEA) worth 20% of the overall final grade. Recently, Ofqual became aware of instances of malpractice (e.g. pressure on teachers to get good results, solutions appearing on the internet, and the search for one ideal solution to programming problems that can be easily learnt). It responded by informing awarding bodies that future assessments would be by written examination only (Ofqual, 2018, 2019). Ofqual then released a consultation and provided awarding bodies with ten options for how to assess programming. The conclusion was that programming should always be assessed by written examination. Whilst the consultation would have no impact on the Cambridge IGCSE[*], one of the options made a direct link to the risks of using pre-release materials (PRM).

“…There are risks to the pre-release approach…primarily around the extent to which the questions asked in the exam may become predictable…encourage question-spotting…have an impact on validity and narrow teaching and learning.” (Ofqual, 2018, p. 15)

Keeping programming central to the IGCSE is important because we want to assess programming authentically and validly. The computer science IGCSE prepares both students who want to become programmers and those who want to pursue other roles within computing. Cambridge Assessment (Cambridge) regularly reviews its international qualifications against a code of practice, and computer science is currently being reviewed for first assessment in 2023. A decision on the future of the PRM is imminent. If the PRM are a threat to validity, steps will be taken to ensure that validity is not compromised (Cambridge Assessment, 2017).

What are PRM?

PRM are pre-exam resources for the teacher to use that act as a stimulus (Swann, 2009). PRM are used in a similar way to coursework to increase the authenticity and the construct representation (validity) of the qualification (Crisp, 2009; Johnson & Crisp, 2009). Little research exists on the use of PRM. Because Ofqual suggests them as a viable option for assessing programming, it is important to investigate why they are used and whether their use is valid.

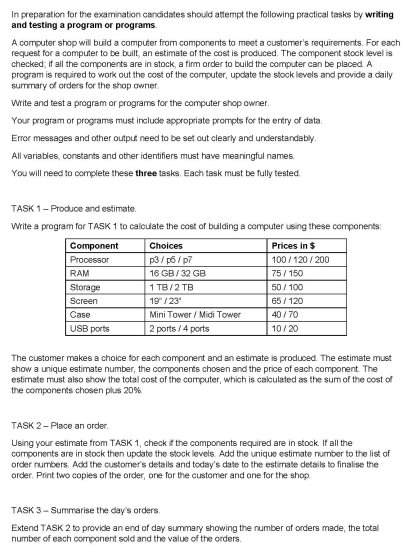

PRM are used in computer science to ensure that teachers give their students some hands-on programming experience. Tasks are written so that they can be solved in different ways, which encourages students to explore and try different solutions. The PRM require learners to write and test a program or programs. An example is shown in Figure 1. PRM are made available online after each exam session, so it is relatively easy for teachers and students to question-spot and predict tasks, which are predictable and formulaic. This brings their use into question, because predictability of this kind is a threat to validity.

Figure 1: Example PRM used in IGCSE Computer Science (Cambridge Assessment International Education, 2018).

The validity of PRM

Is validity finite or a series of never-ending sliding scales? (Cronbach, 1988; Messick, 1979). The 1921 definition of validity, whether a test measures what it is supposed to measure, “provided the foundation for all subsequent thinking on validity” (Newton & Shaw, 2014, p. 18) and is still relevant today (Borsboom, Mellenbergh, & van Heerden, 2004). However, as the PRM are not the ‘test’, this simple definition does not help. The 1921 definition, whilst simple and easy to understand, is actually quite narrow and renders the PRM out of scope.

If a valid assessment is one that tests the content, skills and understanding that it intends to measure (Newton & Shaw, 2014), we can still conclude that the PRM are out of scope, as they are not the assessment. Messick defined validity as “an integrated evaluative judgement of the degree to which empirical evidence and theoretical rationales support the adequacy and appropriateness of inferences and actions based on test scores or other modes of assessment” (Messick, 1989, p. 13). The phrase ‘other modes of assessment’ is interesting here because it opens up the concept of validity to aspects beyond the test or test scores: anything related to the test could affect validity. Under this definition, PRM are in scope. Wiliam described the quality of assessments as being determined by “reliability, dependability, generalisability, validity, fairness and bias” (Wiliam, 2008, p. 123). Validity has been described as “an evolving concept” (Cizek, 2012, p. 41; Wiliam, 2008, p. 125), and whilst I tend to agree, I am conflicted, because the concept has become bloated, amorphous and confused, and the various camps of validity theorists lack consensus. It is also unlikely that there will ever be a consensus, because views on validity have changed and will carry on changing in the future (Newton & Shaw, 2014).

The validity concept can be deconstructed into many aspects:

- face validity – does the assessment look like it is testing what it is supposed to?

- content validity – are the syllabus topics appropriately covered?

- construct validity – are the syllabus constructs appropriately covered?

- predictive validity – (criterion-related validity) does the assessment identify candidates who will succeed and who will not?

- consequential validity – how are the assessments used? (Isaacs, 2013).

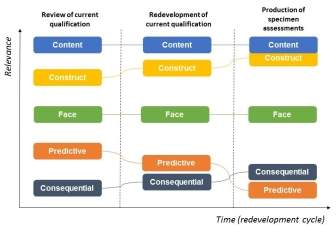

Content, construct and predictive validity form a traditional view of validity (Crisp, 2009; Cronbach & Meehl, 1955). It is useful to think of all these concepts as being associated with a qualification during its redevelopment. However, each aspect will have differing relevance during the redevelopment cycle (depicted in Figure 2).

Figure 2: The relevance of the various aspects of validity during the redevelopment cycle of a qualification.

Face validity is subjective, without evidence, and based on faith (Messick, 1989). Downing claimed that face validity is “sloppy at best and fraudulent and misleading at worst” (Downing, 2006, p. 8). I agree that face validity is a weak viewpoint. For example, we could say that the PRM have face validity, but without evidence our claim is baseless. We need to look ‘under the hood’ of the qualification and investigate other aspects of validity.

Content validity refers to the content of the subject and whether the assessment samples that content (AERA, APA, & NCME, 1999). The PRM consist of programming content, which is part of the computer science syllabus, but does that mean we can claim content validity? It has been argued that content validity is a property of candidates’ responses to questions rather than of the questions themselves (Lennon, 1956; Newton & Shaw, 2014). This view of content validity helps us, as PRM are created to elicit programming responses. It is here, though, that the lines between content and construct become blurred, as programming is part of both the content and the construct of the qualification.

Construct validity can also be evidenced by using the syllabus document. Messick stated that “all measurements should be construct referenced” (Messick, 1975, p. 957), a view echoed by Guion: “all validity is at its base some form of construct validity” (Guion, 1977, p. 410). Messick concluded that “…construct validity is indeed the unifying concept of validity…” (Messick, 1980, p. 1015). Construct under-representation and construct-irrelevant variance are the threats to this aspect of validity. Messick’s conclusion is not perfect, as validity can be seen as much more than the constructs associated with a test (Kane, 2001). As programming is part of the assessment objectives, we can claim that the qualification has construct validity and does not suffer from construct under-representation. However, the PRM might introduce construct-irrelevant variance, weakening our construct validity claim. This view of validity was investigated in the use of PRM for GCSE Geography, where it was concluded that a variety of assessments creates the capacity to assess multiple constructs, which enhances construct validity (Johnson & Crisp, 2009). Because multiple constructs are associated with the computer science qualification, the same reasoning suggests that it has construct validity.

Predictive validity describes whether the predictive properties of a test can be used to validate it (Wiliam, 2008). This is harder to evidence in my context because the evidence is linked to the syllabus aims. Do students who study computer science with practical programming succeed in the future? This is one of the aspirations of the syllabus, something we hope is true, and the reason the PRM exist. Because of the difficulty of evidencing this in a newly revised qualification, predictive validity would have lower relevance than other aspects of validity. However, at the start of the redevelopment we could match students’ IGCSE grades with their AS & A Level grades to ascertain whether there were any issues with predictive validity.

Consequential validity is even harder to evidence, and it has been argued that it is different from the other aspects of validity (Kane, 2001; Koretz, 2009). During the redevelopment of a qualification, consequential validity is usually of low relevance. In the context of PRM, however, I would argue that it is highly relevant, as we would hope that the inferences exams officers, employers and others make about candidates are as we intended (i.e. accurate). This relies on us making valid assessments. Validity has been described as “the extent to which the evidence supports or refutes the proposed interpretations and uses of assessment results” (Kane, 2006, p. 17). Not everyone agrees with this liberal view of validity, and there has recently been a resurgence of a more conservative view, which takes us back to the test rather than the inferences (Newton & Shaw, 2014). It is not surprising that a more conservative (and in some cases hyper-conservative) view of validity has re-emerged, as more baggage has been bolted on to the concept, creating confusion. This underpins the evolving (if somewhat amorphous) concept described by Wiliam and explains the apparent lack of consensus.

Whether validity is about a test or the inferences being made is a ‘chicken and egg’ scenario. An interplay between the various aspects of validity must therefore exist, and this links to the unified concept of validity (Messick, 1980). However, in redeveloping qualifications, it is useful to deconstruct validity into its separate aspects and take a pragmatic view of each, whilst not losing sight of the bigger picture. It is therefore important to separate measurement, decision and impact (Borsboom & Mellenbergh, 2007; Newton & Shaw, 2014). This helps when thinking about redeveloping qualifications. The properties of a test will affect the inferences and vice versa (Cizek, 2012). For example, the make-up of the content and constructs in computer science related to the assessment will affect the inferences made about candidates who sit the exam, and those inferences will affect the redevelopment of the qualification, especially if they are inaccurate. This symbiotic relationship means that scientific and ethical judgements should co-exist: if the inferences made about students are inaccurate, validity is compromised; and if the test does not meet quality standards, validity is compromised and the inferences will be inaccurate (Cizek, 2012).

We want to make inferences about computer science students across all aspects of computer science: theory, computational thinking, problem solving and programming skills. However, assessing these skills validly and reliably can be an issue (Ofqual, 2018).

The reliability of assessing programming skills (even with PRM) can be affected by a number of things. For example, students have good and bad days, they might be ill when the PRM are covered in class, or the tasks could be more suitable for a particular group (or groups) of students. For this reason, it could be argued that PRM should not be used at all. But what about the teaching of practical computer science? There is a parallel here with science, where Cambridge still offers a practical test alongside an alternative-to-practical paper. Because of the balance of theory and practical work taught in the classroom, there is concurrent validity between the two tests, as a recent internal comparability study of the tests confirmed. However, as the pressure on both teachers and learners to get good grades mounts (Popham, 2001), less time will be spent on practical elements. Assessment designers need to keep these pressures in mind when designing valid assessments.

Designing valid assessments

Assessment designers are required to create valid assessments and to minimise threats to validity. This is underpinned by one of Cambridge’s ten principles of validity: “Those responsible for developing assessments should ensure that the constructs at the heart of each assessment are well grounded.” (Cambridge Assessment, 2017, p. 13). Cambridge describes validity (and validation processes) as “a pervasive concern which runs through all its work on the design and operation of assessment systems” (Cambridge Assessment, 2017, p. 12).

The main threats to validity that need to be minimised or avoided are inadequate reliability, construct under-representation, construct-irrelevant variance, construct-irrelevant easiness and construct-irrelevant difficulty (Cambridge Assessment International Education, 2017; Newton & Shaw, 2014). However, assessment designers need to be careful, as focusing on one threat to validity could inadvertently create (or aggravate) another (Cambridge Assessment, 2017). For example, we would not replace the PRM with a multiple-choice question (MCQ) paper. Whilst reliability would increase, construct under-representation would also increase, as it would be difficult to assess the programming construct effectively. Construct-irrelevant variance would increase too, as MCQs can be easy to practise for and can become more about test-taking skills than about what the student actually understands. Consequential validity would also suffer, as we would not be assessing programming skills authentically, and the inferences made about candidates would therefore be inaccurate.

Threats to validity such as low motivation, assessment anxiety, inappropriate assessment conditions, and tasks or responses not being communicated could also be present. It is generally accepted that the use of PRM can improve motivation and lower assessment anxiety (Johnson & Crisp, 2009), which would have a positive impact on outcomes for these students. However, if the PRM shifted performance significantly upwards (or downwards), the inferences made about students would be inaccurate, compromising consequential validity.

The threats to consequential validity, and the threats associated with bias, raise questions about fairness and about whether Cambridge has an ethical duty to candidates to ensure that the inferences made about them are as intended (accurate).

Fairness and PRM

Fairness “is subject to different definitions and interpretations in different social and political circumstances” (AERA et al., 1999, p. 80). Test developers need to “address fairness in the design, development, administration and use” of a test (Educational Testing Service (ETS), 2002, p. 18).

In the public mind, fairness is linked directly with the equality of test scores, a view Zieky (2006) regards as mistaken. Fairness is much more than the equality of test scores, as can be seen in how PRM are used in practice, especially across a variety of cultural settings.

“If something looks unfair, then it is believed to be unfair” (Zieky, 2006, p. 362). I would challenge this view because, like face validity, it is a subjective argument with little evidence. The PRM do not look unfair, but that does not mean that they are fair. Zieky went on to say “because fairness is linked to validity and validity is linked to the purpose of a test, an item may be fair for one purpose and unfair for a different purpose” (Zieky, 2006, p. 363). The PRM for computer science are linked to the items on the test, and I would argue that the PRM are effectively items within the test. Therefore, even if the PRM look fair, validity would be compromised if their administration is not fair (Ofqual, 2018).

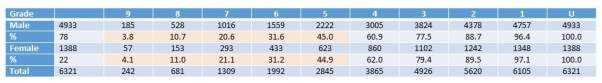

Validity and fairness are linked (Gipps & Murphy, 1994). Generally, computer science tends to appeal to (and in some cases favour) boys over girls, and boys are therefore more likely than girls to become familiar with technical computing terms (Wong, 2016). I disagree with this, because recent grade statistics (Figure 3) show that the performance of girls at grade 5 (and above) is equivalent to that of boys.

Figure 3: Pearson Edexcel grade statistics for GCSE Computer Science, total cohort – June 2018 (Pearson Edexcel, 2018).

There is still disagreement about the meaning of fairness in assessment (Zieky, 2006). Zieky states that “…interpreting the scores on a test as a measure of innate ability when people in different groups did not have an equal opportunity to learn the material being tested is unfair” (Zieky, 2006, p. 375).

This has direct parallels with the use (and misuse) of PRM. One of the main issues with the PRM for the IGCSE is the inability to control what happens to the materials once they are in the hands of the exams officer or teacher. Different groups of students could be disadvantaged by not having equal opportunity to work with the PRM. This would be an unfair situation and would introduce bias into the inferences made about some candidates. If fairness is linked to the validity of the assessment, then this is an argument that PRM are a threat to validity. In an age where fairness is at the front of people’s minds, we would treat this as a major threat that needs to be addressed (Cambridge Assessment International Education, 2017).

Conclusion

Using PRM in the assessment of programming for computer science will have an effect on the overall validity of the qualification. Whilst good assessment design would ensure that the PRM have face, content and construct validity, it is difficult to ensure predictive and, possibly most importantly, consequential validity. The bias towards some students described in this essay leads to issues of fairness with the PRM, and hence with consequential validity – this is unacceptable. PRM force schools to do a specific task set by us, which limits the programming that schools can teach. Schools cannot design tasks that suit their learners (e.g. differentiation) or set other tasks that test the same constructs. The tasks in the PRM are also predictable (Ofqual, 2018). With an increasing appetite for pre-worked solutions, teachers begin to teach to the test because of the pressure placed on them to get good grades (Popham, 2001). This would affect the inferences made about candidates and is another threat to consequential validity. This leaves us with the conclusion that the PRM, whilst valid for content and construct (and face), have the potential to undermine the inferences we want to make about candidates who take the qualification. If we interpret validity in terms of inferences rather than properties of a test, and use the evidence presented, PRM use would be a threat to the validity of the qualification.

Future recommendations

There is no perfect solution for assessing programming skills authentically (Ofqual, 2019), and the Computing At School (CAS) group agrees. CAS supports the use of PRM because they promote programming in the classroom. However, it proposed an additional option that used PRM along with an on-screen examination, as adopted by Oxford AQA. CAS argues that PRM can support all students and that they help weaker students access the questions in the examination. It also says that students’ understanding of the PRM will enable them to answer the exam questions better through analysis of the novel code or scenario (CAS, 2018). The issue of fairness in the international release of PRM would still exist, and I wonder whether Oxford AQA also has malpractice issues with its PRM. The effect of testing programming in an on-screen environment also needs to be investigated. It might appear to be a more authentic way of testing programming skills, but it might only make the assessment more logistically challenging and increase the potential for construct-irrelevant variance.

The most likely outcome is that the PRM will be removed from the IGCSE assessment and replaced with more of the structured questions already present. This would remove the problem of bias and fairness and minimise the threat to consequential validity. However, there could be negative impacts on validity if we observe any negative washback into the classroom. Therefore, before decisions are made to remove the PRM, we would need to consult our examiners, teachers and students. If PRM are removed, we would need to monitor the situation closely and provide support to our teachers to ensure that programming remains a central part of their teaching, their students’ learning and our valid assessment of computer science.

References

- AERA, APA, & NCME. (1999). The Standards for Educational and Psychological Testing. Washington, DC: AERA.

- Borsboom, D., & Mellenbergh, G. J. (2007). Test validity in cognitive assessment. In Cognitive diagnostic assessment for education: Theory and applications (pp. 85–115). New York, NY, US: Cambridge University Press.

- Borsboom, D., Mellenbergh, G. J., & van Heerden, J. (2004). The Concept of Validity. Psychological Review, 111(4), 1061–1071.

- Cambridge Assessment. (2017). The Cambridge Approach to Assessment Principles for designing, administering and evaluating assessment. Cambridge Assessment.

- Cambridge Assessment International Education. (2017). Code of Practice. Cambridge Assessment.

- Cambridge Assessment International Education. (2018). Cambridge IGCSE Computer Science Paper 21 Pre-release Material. Cambridge Assessment. Retrieved from https://schoolsupporthub.cambridgeinternational.org/past-exam-papers/?keywords=0478&years=2018&series=June

- CAS. (2018). Computing at School: Response to Ofqual Consultation. Ofqual.

- Cizek, G. J. (2012). Defining and distinguishing validity: interpretations of score meaning and justifications of test use. Psychological Methods, 17(1), 31–43.

- Crisp, V. (2009). Does assessing project work enhance the validity of qualifications? The case of GCSE coursework. Educate~, 9(1), 16–26.

- Cronbach, L. J. (1988). Five perspectives on the validity argument. In Test validity (pp. 3–17). Hillsdale, NJ, US: Lawrence Erlbaum Associates, Inc.

- Cronbach, L. J., & Meehl, P. E. (1955). Construct validity in psychological tests. Psychological Bulletin, 52(4), 281–302.

- Downing, S. M. (2006). Face validity of assessments: faith-based interpretations or evidence-based science? Medical Education, 40(1), 7–8.

- Educational Testing Service (ETS). (2002). ETS standards for quality and fairness. Princeton, NJ.

- Gipps, C. V., & Murphy, P. (1994). A fair test? Assessment, achievement and equity. Buckingham, England; Philadelphia: Open University Press.

- Guion, R. M. (1977). Content validity: three years of talk – what’s the action? Public Personnel Management, 6(6), 407–414.

- Isaacs, T. (Ed.). (2013). Key concepts in educational assessment. London: SAGE.

- Johnson, M., & Crisp, V. (2009). An Exploration of the Effect of Pre-Release Examination Materials on Classroom Practice in the UK. Research in Education, 82(1), 47–59.

- Kane, M. T. (2001). Current Concerns in Validity Theory. Journal of Educational Measurement, 38(4), 319–342.

- Kane, M. T. (2006). Validation. In R. L. Brennan, National Council on Measurement in Education, & American Council on Education (Eds.), Educational measurement (4th ed.). Westport, CT: Praeger.

- Koretz, D. M. (2009). Measuring up: what educational testing really tells us. Cambridge, Mass.: Harvard University Press.

- Lennon, R. T. (1956). Assumptions Underlying the Use of Content Validity. Educational and Psychological Measurement, 16(3), 294–304.

- Messick, S. (1975). The standard problem: Meaning and values in measurement and evaluation. American Psychologist, 30(10), 955–966.

- Messick, S. (1979). Test Validity and the Ethics of Assessment. ETS Research Report Series, 1979(1), i–43.

- Messick, S. (1980). Test validity and the ethics of assessment. American Psychologist, 35(11), 1012–1027.

- Messick, S. (1989). Validity. In R. L. Linn, National Council on Measurement in Education, & American Council on Education (Eds.), Educational measurement (3rd ed.). New York: American Council on Education/Macmillan.

- Newton, P. E., & Shaw, S. D. (2014). Validity and educational assessment (1st ed). Thousand Oaks, CA: Sage Publications.

- Ofqual. (2018). Future assessment arrangements for GCSE Computer Science. Ofqual.

- Ofqual. (2019). Decisions on future assessment arrangements for GCSE (9 to 1) computer science. Ofqual.

- Pearson Edexcel. (2018). GCSE Computer Science Grade Statistics. Pearson Edexcel. Retrieved from https://qualifications.pearson.com/en/support/support-topics/results-certification/grade-statistics.html?Qualification-Family=GCSE

- Popham, W. J. (2001). Teaching to the Test? Educational Leadership, 58(6), 16–20.

- Swann, F. (2009). The new OCR specification. Sociology Review, 19(1), 14+.

- Wiliam, D. (2008). Quality in Assessment. In Unlocking Assessment: Understanding for Reflection and Application (1st ed., pp. 123–137). David Fulton Publishers. Retrieved from https://libsta28.lib.cam.ac.uk:3148/lib/cam/reader.action?docID=332077&ppg=138

- Wong, B. (2016). ‘I’m good, but not that good’: digitally-skilled young people’s identity in computing. Computer Science Education, 26(4), 299–317.

- Zieky, M. (2006). Fairness Review in Assessment. Routledge Handbooks Online.

[*] For clarification purposes, the Cambridge IGCSE does not contain an NEA component. Programming skills are assessed in a written examination, partly made up of questions based on PRM. The percentage of programming assessed in this qualification is comparable to the UK GCSE.
