Facial Emotion Recognition Systems

| ✅ Paper Type: Free Essay | ✅ Subject: Engineering |

| ✅ Wordcount: 2203 words | ✅ Published: 31 Aug 2017 |

CHAPTER-1

INTRODUCTION

1.1: Introduction

The face plays an important role in social communication. It is a 'window' to human character, reactions and ideas. Psychological research has shown that the nonverbal part is the most informative channel in social communication: according to Mehrabian's often-cited findings on the communication of feelings and attitudes, the verbal part conveys about 7% of the message, the vocal part 38% and facial expression about 55%.

For this reason, the face is a subject of study in many areas of science, such as psychology, behavioral science, medicine and, finally, computer science. In computer science, much effort has been put into automating the process of face detection and segmentation. Several methods addressing the problem of facial feature extraction have been proposed. The key problem is to provide a suitable face representation that remains robust to the diversity of facial appearances.

Face recognition plays an important role in people's lives, with applications ranging from commerce to law enforcement, such as real-time surveillance, biometric personal identification and information security. It is one of the most challenging topics at the interface of computer vision and cognitive science. Over the past years, extensive research on face recognition has been conducted by psychophysicists, neuroscientists and engineers. In general, face recognition can be formulated as follows: given a static image, identify the faces it contains using a database of stored faces. Available collateral information, such as facial expression, may improve the recognition rate. Generally speaking, if sufficient face images are provided, the quality of face recognition depends mainly on feature extraction and recognition modeling.

Facial emotion recognition in uncontrolled environments is a very challenging task due to large intra-class variations caused by factors such as illumination and pose changes, occlusion, and head movement. The accuracy of a facial emotion recognition system generally depends on two critical factors: (i) extraction of facial features that are robust under intra-class variations (e.g. pose changes), but are distinctive for various emotions, and (ii) design of a classifier that is capable of distinguishing different facial emotions based on noisy and imperfect data (e.g., illumination changes and occlusion).

For recognition modeling, many researchers evaluate model performance by recognition rate rather than by computational cost. Recently, Wright and Ma reported a method called sparse representation based classification (SRC). More specifically, SRC represents a test image sparsely as a combination of training samples via ℓ1-norm minimization, which can be solved by balancing the reconstruction error against the sparsity of the coefficients. The reported recognition rate of SRC is much higher than that of classical algorithms such as Nearest Neighbor, Nearest Subspace and linear Support Vector Machine (SVM). However, SRC has three drawbacks. First, SRC is based on holistic features, which cannot accurately capture partial deformations of face images. Second, ℓ1-regularized SRC usually runs slowly for high-dimensional face images.

Third, to handle occluded face images, Wright et al. introduced an occlusion dictionary that sparsely codes the occluded components of the image. However, the computational cost of SRC increases drastically because of the large number of elements in the occlusion dictionary. This cost limits the use of SRC in real-time applications, and reducing it is attracting increasing research attention.
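To make the SRC idea concrete, the following is a minimal pure-Python sketch, assuming each training face has already been flattened into a feature vector (the tiny 4-dimensional vectors and the labels "happy"/"sad" used with it are hypothetical). It normalizes the dictionary atoms, solves the ℓ1-regularized problem min 0.5*||y - D x||^2 + lam*||x||_1 with iterative soft-thresholding (ISTA, chosen here only because it is short; it is not necessarily the solver used in the cited work) and assigns the class whose coefficients give the smallest reconstruction residual. Setting `occlusion=True` appends an identity matrix to the dictionary, following the extended-dictionary idea B = [A, I] of Wright et al.: with m pixels this adds m atoms, which illustrates why the cost grows so quickly.

```python
import math


def unit(v):
    """Scale a vector to unit l2 norm (SRC normalizes every atom and the test sample)."""
    n = math.sqrt(sum(a * a for a in v)) or 1.0
    return [a / n for a in v]


def soft(v, t):
    """Soft-thresholding: the proximal operator of t * |v|."""
    return math.copysign(max(abs(v) - t, 0.0), v)


def combine(cols, coef, m):
    """Return sum_j coef[j] * cols[j], i.e. D @ coef for a dictionary stored as columns."""
    out = [0.0] * m
    for col, c in zip(cols, coef):
        if c:
            for i in range(m):
                out[i] += c * col[i]
    return out


def ista(cols, y, lam, iters=1000):
    """Minimize 0.5*||y - D x||^2 + lam*||x||_1 by iterative soft-thresholding."""
    m = len(y)
    # ||D||_F^2 is an upper bound on ||D||_2^2, the Lipschitz constant of the gradient.
    L = sum(a * a for col in cols for a in col)
    x = [0.0] * len(cols)
    for _ in range(iters):
        r = [yi - di for yi, di in zip(y, combine(cols, x, m))]
        grad = [sum(a * b for a, b in zip(col, r)) for col in cols]  # D^T (y - D x)
        x = [soft(xj + g / L, lam / L) for xj, g in zip(x, grad)]
    return x


def src_classify(train, labels, y, lam=0.01, occlusion=False):
    """Return (label, residuals): the class whose atoms reconstruct y with least error."""
    m = len(y)
    y = unit(y)
    cols = [unit(v) for v in train]
    n = len(cols)
    if occlusion:
        # Extended dictionary B = [A, I]: one extra atom per pixel, n + m atoms in total.
        cols = cols + [[1.0 if i == k else 0.0 for i in range(m)] for k in range(m)]
    x = ista(cols, y, lam)
    w, e = x[:n], (x[n:] if occlusion else [0.0] * m)
    residuals = {}
    for c in sorted(set(labels)):
        # delta_c(w): keep only the coefficients that belong to class c.
        wc = [wj if labels[j] == c else 0.0 for j, wj in enumerate(w)]
        approx = combine(cols[:n], wc, m)
        residuals[c] = math.sqrt(
            sum((yi - ei - ai) ** 2 for yi, ei, ai in zip(y, e, approx))
        )
    return min(residuals, key=residuals.get), residuals
```

With `train = [[1, 0, 0, 0], [0.9, 0.1, 0, 0], [0, 0, 1, 0], [0, 0, 0.9, 0.1]]` and `labels = ["happy", "happy", "sad", "sad"]`, a test vector close to the first two samples is assigned to "happy", because only the "happy" coefficients can reconstruct it. Even in this toy setting every call loops over all atoms in every iteration, so enlarging the dictionary with m occlusion atoms directly multiplies the work.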

1.2: Psychological Background

In 1978, Ekman et al. [2] introduced a system for measuring facial expressions called FACS (Facial Action Coding System). FACS was developed by analyzing the relations between muscle contractions and the changes in facial appearance they cause. Contractions of the muscles responsible for the same action are marked as an Action Unit (AU). Expression analysis with FACS is based on decomposing an observed expression into a set of Action Units. There are 46 AUs that represent changes in facial expression and 12 AUs connected with eye gaze direction and head orientation. Action Units are highly descriptive in terms of facial movements; however, they do not provide any information about the message they represent. AUs are labeled with a description of the action (Fig. 1).

Fig. 1: Examples of Action Units

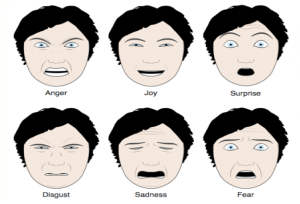

A facial expression described by Action Units can then be analyzed at the semantic level in order to find the meaning of particular actions. According to Ekman's theory [2], there are six basic emotion expressions that are universal for people of different nations and cultures: joy, sadness, anger, fear, disgust and surprise (Fig. 2).

Fig. 2: Six universal emotions

The Facial Action Coding System was developed to help psychologists analyze facial behavior. Facial images were studied to detect occurrences of Action Units, and the AU combinations were then translated into emotion categories. This procedure required much effort, not only because the analysis was done manually, but also because about 100 hours of training were needed to become a FACS coder. For this reason, researchers sought to automate FACS-based analysis with various kinds of computer software.
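The translation step described above (detected AUs to emotion category) can be sketched as a lookup against prototype AU combinations. The table below is an assumption, not a mapping taken from this thesis: it uses simplified prototypes of the kind commonly quoted from EMFACS-style tables (e.g. joy as AU6 + AU12), with intensity letters such as "5B" dropped, and the Jaccard-similarity matching rule is a hypothetical choice made for illustration.

```python
# Simplified prototype AU combinations (assumed; intensity codes omitted).
PROTOTYPES = {
    "joy": {6, 12},
    "sadness": {1, 4, 15},
    "surprise": {1, 2, 5, 26},
    "fear": {1, 2, 4, 5, 7, 20, 26},
    "anger": {4, 5, 7, 23},
    "disgust": {9, 15, 16},
}


def aus_to_emotion(detected):
    """Return (emotion, score) for the prototype with the highest Jaccard overlap.

    Returns (None, 0.0) when no prototype shares any AU with the detected set.
    """
    best, best_score = None, 0.0
    for emotion, proto in PROTOTYPES.items():
        score = len(proto & detected) / len(proto | detected)
        if score > best_score:
            best, best_score = emotion, score
    return best, best_score
```

For example, `aus_to_emotion({6, 12})` returns `("joy", 1.0)`. A partial-overlap score is used instead of exact matching because automatically detected AU sets are rarely complete.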

1.3: Facial emotion recognition systems

The aim of a facial emotion recognition system (FERS) is to replicate the human visual system as closely as possible. This is a challenging task in computer vision because it requires not only effective image/video analysis methods but also a well-suited feature vector for the machine learning process. A primary requirement for a FER system is that it be easy to use and effective. This implies full automation, so that no extra manual effort is required. It is also preferable for such a system to run in real time, which is particularly important in both human-computer interaction and human-robot interaction applications.

Besides, the subject should be allowed to act spontaneously while data is being captured for analysis. The system should be designed to avoid restrictions on body and head movements, which can also be an important source of information about the displayed emotion. Restrictions regarding facial hair, glasses or additional make-up should be kept to a minimum. Furthermore, handling occlusions is a challenge for such a system and should also be considered.

Other important properties desired in a FER system are user independence and environment independence. The former means that any user should be able to work with the system, regardless of skin color, age, gender or nationality. The latter refers to handling complex backgrounds and varying lighting conditions. A further advantage could be view independence, which is achievable in systems based on 3D vision.

1.3.1. Face Detection

A FER system comprises three steps: face detection, feature extraction and expression recognition. In the first step, the system takes an input image and applies image processing methods to it in order to detect the face region. The system can operate on static images, where this process is called face localization, or on videos, where it is called face tracking. The main problems encountered at this step are different scales and orientations of the face. They are usually caused by subject movements or changes in distance from the camera.

Large body movements can also cause abrupt changes in the position of the face between successive frames, which makes tracking harder. Moreover, complex backgrounds and varying lighting conditions can also make tracking difficult. For example, when there is more than one face in the image, the system should be able to distinguish which one is being tracked. Finally, occlusions, which often appear in spontaneous reactions, need to be handled as well.
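When several faces are visible, one simple way to keep following the same face (an assumption here, not a method described in the text) is to associate the tracked bounding box with the detection in the next frame that overlaps it most, measured by intersection over union (IoU). The box format `(x, y, w, h)` and the 0.3 threshold are hypothetical choices.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x, y, w, h)."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def update_track(tracked, detections, min_iou=0.3):
    """Pick the detection in the new frame that best overlaps the tracked face.

    Returns None if no detection overlaps enough (e.g. the face is occluded
    or jumped too far between frames).
    """
    best = max(detections, key=lambda d: iou(tracked, d), default=None)
    if best is None or iou(tracked, best) < min_iou:
        return None
    return best
```

Returning `None` gives the tracker a place to react to occlusions or abrupt movements, for example by re-running the detector on the whole frame instead of trusting a poorly overlapping box.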

The problems described above motivated the search for methods that could solve them. Face detection methods can be divided into two groups: holistic methods, where the face is treated as a whole unit, and analytic methods, where the co-occurrence of characteristic facial elements is considered.
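As a toy example of the holistic approach, where the face is treated as a whole unit, the sketch below slides a face template over a grayscale image (both given as 2-D lists) and scores each window with zero-mean normalized cross-correlation. This is a simplified illustration: the template, the 0.8 threshold and the single-scale search are assumptions, and practical detectors use trained classifiers and scan several image scales to cope with the scale changes mentioned earlier.

```python
import math


def ncc(patch, template):
    """Zero-mean normalized cross-correlation between two equal-size 2-D lists."""
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    mp, mt = sum(p) / len(p), sum(t) / len(t)
    num = sum((a - mp) * (b - mt) for a, b in zip(p, t))
    den = math.sqrt(sum((a - mp) ** 2 for a in p) * sum((b - mt) ** 2 for b in t))
    return num / den if den > 0 else 0.0


def detect(image, template, threshold=0.8):
    """Slide the template over the image and return (score, x, y) of the best window.

    Returns None if even the best window scores below the threshold.
    """
    th, tw = len(template), len(template[0])
    best = None
    for y in range(len(image) - th + 1):
        for x in range(len(image[0]) - tw + 1):
            patch = [row[x:x + tw] for row in image[y:y + th]]
            s = ncc(patch, template)
            if best is None or s > best[0]:
                best = (s, x, y)
    return best if best is not None and best[0] >= threshold else None
```

Because the whole window is compared at once, a single occluded region lowers the score of the entire face, which is one reason analytic, part-based methods are also studied.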

1.3.2. Feature Extraction

After the face has been located in the image or video frame, it can be examined for the occurrence of facial actions. Two types of features are commonly used to describe facial expression: geometric features and appearance features. Geometric features measure the displacements of certain parts of the face, such as the brows or mouth corners, while appearance features describe the changes in facial texture when a particular action is performed. Apart from the feature type, FER systems can be distinguished by their input, which can be static images or image sequences.
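Local binary patterns (LBP) are one widely used appearance descriptor; the text does not name a specific descriptor, so LBP is an assumption here. Each pixel is compared with its 8 neighbours, the comparison bits form an 8-bit code, and the histogram of codes summarizes the local texture. The sketch computes a single histogram for the whole image; face analysis systems typically split the face into regions and concatenate the per-region histograms.

```python
# Neighbour offsets (dy, dx), clockwise from the top-left pixel; bit i <-> OFFSETS[i].
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_codes(img):
    """Basic 3x3 LBP: set bit i when neighbour i is >= the centre pixel (borders skipped)."""
    h, w = len(img), len(img[0])
    codes = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            centre = img[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(OFFSETS):
                if img[y + dy][x + dx] >= centre:
                    code |= 1 << bit
            row.append(code)
        codes.append(row)
    return codes


def lbp_histogram(img):
    """256-bin normalized histogram of LBP codes: a simple texture (appearance) descriptor."""
    hist = [0] * 256
    for row in lbp_codes(img):
        for code in row:
            hist[code] += 1
    total = sum(hist)
    return [v / total for v in hist] if total else hist
```

A wrinkle or furrow that appears when a muscle contracts changes the local intensity ordering, and therefore shifts mass between histogram bins, even if no landmark moves noticeably.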

Geometric feature measurement is generally connected with face region analysis, in particular finding and tracking key points in the face region. Possible problems in this decomposition of the face include occlusions and the presence of facial hair or glasses. Moreover, defining the feature set is difficult, because the features should be descriptive and preferably uncorrelated.
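A geometric feature vector can be as simple as the displacement of each tracked point from its position in a neutral frame. The sketch below assumes the landmarks have already been located (the landmark names are hypothetical) and divides each displacement by the inter-ocular distance so that the features do not depend on the size of the face in the image.

```python
import math


def geometric_features(neutral, current, left_eye, right_eye):
    """Landmark displacements from the neutral frame, normalized by inter-ocular distance.

    neutral, current: dicts mapping a landmark name to its (x, y) pixel position.
    left_eye, right_eye: keys of the eye-centre landmarks used for scale normalization.
    """
    lx, ly = neutral[left_eye]
    rx, ry = neutral[right_eye]
    iod = math.hypot(rx - lx, ry - ly)
    if iod == 0:
        raise ValueError("eye landmarks coincide; cannot normalize")
    feats = {}
    for name, (x0, y0) in neutral.items():
        x1, y1 = current[name]
        feats[name] = ((x1 - x0) / iod, (y1 - y0) / iod)
    return feats
```

Image y-coordinates grow downwards, so a negative vertical displacement of the mouth corners means they moved up, as in a smile.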

1.3.3. Recognition of Expression

The last part of the FER system is based on machine learning theory; specifically, it is a classification task. The input to the classifier is a set of features extracted from the face region in the previous stage. This feature set is designed to describe the facial expression.

Classification requires supervised training, so the training set should consist of labeled data. Once the classifier is trained, it can recognize input images by assigning them a specific class label. Facial expression classification is most commonly performed either in terms of Action Units, as proposed in the Facial Action Coding System, or in terms of the basic emotions: happiness, sadness, anger, surprise, disgust and fear. Many different machine learning methods are used for classification, e.g. K-Nearest Neighbors, Artificial Neural Networks, Support Vector Machines, Hidden Markov Models, Expert Systems with rule-based classifiers, Bayesian Networks and Boosting Techniques (AdaBoost, GentleBoost).
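K-Nearest Neighbors is the simplest of the listed classifiers to sketch. Assuming each face has already been reduced to a numeric feature vector (the two-dimensional vectors and emotion labels in the test are hypothetical), a test sample receives the label held by the majority of its k closest training samples. `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Label x by majority vote among its k nearest training vectors (Euclidean distance)."""
    dists = sorted((math.dist(xi, x), yi) for xi, yi in zip(train_X, train_y))
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]
```

"Training" here is just storing the labeled vectors, which is why k-NN needs the labeled data described above at prediction time as well.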

Three major problems in classification are choosing a good feature set, an effective machine learning method and a diverse training database. The feature set should be composed of features that are discriminative and characteristic of the expression. The machine learning method is usually chosen according to the type of feature set. Finally, the database used for training should be adequate in size and contain varied data. Methods described in the literature can be grouped by the category of classification output.
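One practical way to respect the last requirement when evaluating a classifier is a stratified split, which keeps every emotion class represented in both the training and the test set, combined with the recognition rate (the fraction of correctly labeled test samples). The function names and the 25% test fraction below are illustrative assumptions.

```python
import random
from collections import defaultdict


def stratified_split(samples, labels, test_fraction=0.25, seed=0):
    """Split per class so every emotion appears in train and test in similar proportion."""
    by_class = defaultdict(list)
    for s, label in zip(samples, labels):
        by_class[label].append(s)
    rng = random.Random(seed)
    train, test = [], []
    for label, items in sorted(by_class.items()):
        items = items[:]
        rng.shuffle(items)
        # At least one test sample per class, but always leave one for training.
        n_test = min(len(items) - 1, max(1, round(len(items) * test_fraction))) if len(items) > 1 else 0
        test += [(s, label) for s in items[:n_test]]
        train += [(s, label) for s in items[n_test:]]
    return train, test


def recognition_rate(predict, test):
    """Fraction of (sample, label) pairs for which predict(sample) equals the label."""
    return sum(predict(s) == label for s, label in test) / len(test)
```

A plain random split of a small, unbalanced database can leave a rare emotion out of the test set entirely, which would make the reported recognition rate say nothing about that class.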

1.4: Applications

An enormous amount of information is encoded in facial movements. By observing someone's face, we can learn about his/her:

• Affective state, connected with emotions such as fear, anger and joy, and moods such as euphoria or irritation

• Cognitive activity (brain activity), which can be perceived as concentration or boredom

• Personality traits such as sociability, nervousness or hostility

• Honesty, through the analysis of micro-expressions that reveal hidden emotions

• Psychological state, giving information about illnesses that helps in diagnosing depression, mania or schizophrenia.

Because of the variety of information visible on the human face, facial expression analysis has applications in many fields of science and life. For example, teachers use facial expression analysis to adjust the difficulty of exercises and the pace of learning based on the reactions visible on students' faces. The virtual tutor for e-learning designed by Amelsvoort and Krahmer [26] provides students with suitable content and adjusts the difficulty of courses or tasks based on information obtained from the student's face.

Another application of FERS is in business, where measuring people's satisfaction or dissatisfaction is very important. Many marketing methods collect this information from customers through surveys. Such surveys could be conducted automatically by using customers' facial expressions as a measure of their satisfaction or dissatisfaction. Furthermore, the prototype Computerized Sales Assistant proposed by Shergill et al. chooses appropriate marketing and sales methods based on customers' facial expressions. Facial behavior is also studied in medicine, not only for diagnosing psychological disorders but also to help people with some disabilities. One example is the system proposed by Pioggia et al., which helps autistic children improve their social skills by learning how to recognize emotions.

Facial expressions can also be used for surveillance purposes, as in the prototype developed by Hazelhoff et al. The proposed system automatically detects discomfort in newborn babies by recognizing three behavioral states: sleep, awake and cry. Furthermore, facial expression recognition is widely used in human-robot and human-computer interaction, for example in a smart robotic assistant for people with disabilities based on multimodal HCI. Another example of a human-computer interaction system is one developed for automatically updating avatars in multiplayer online games.

1.5: Thesis Organization

The thesis is organized as follows:

- The thesis opens with an introduction (Chapter 1), which covers the introduction, the psychological background and facial emotion recognition systems, along with the thesis organization and the tools used for the project.

- Chapter 2 presents the literature survey, giving a brief explanation of previous works.

- Chapter 3 describes the proposed system, in which each part of the face is detected and the person's emotion is recognized using extreme sparse learning. A spatio-temporal descriptor and the optical flow method are used to recognize the emotion.

- Chapter 4 describes the software used for the project, i.e. MATLAB.

- Chapter 5 presents and discusses the results obtained for the proposed system.

- Chapter 6 discusses the advantages of the proposed system and the disadvantages of the existing systems.

- Chapter 7 presents the conclusion and future work, and Chapter 8 lists the references.

1.6: Tools Used

Image Processing Toolbox

MATLAB R2013a (version 8.1)


If you are the original writer of this essay and no longer wish to have your work published on UKEssays.com then please click the following link to email our support team:
